Guidance for Product Carbon Footprinting on AWS

Overview

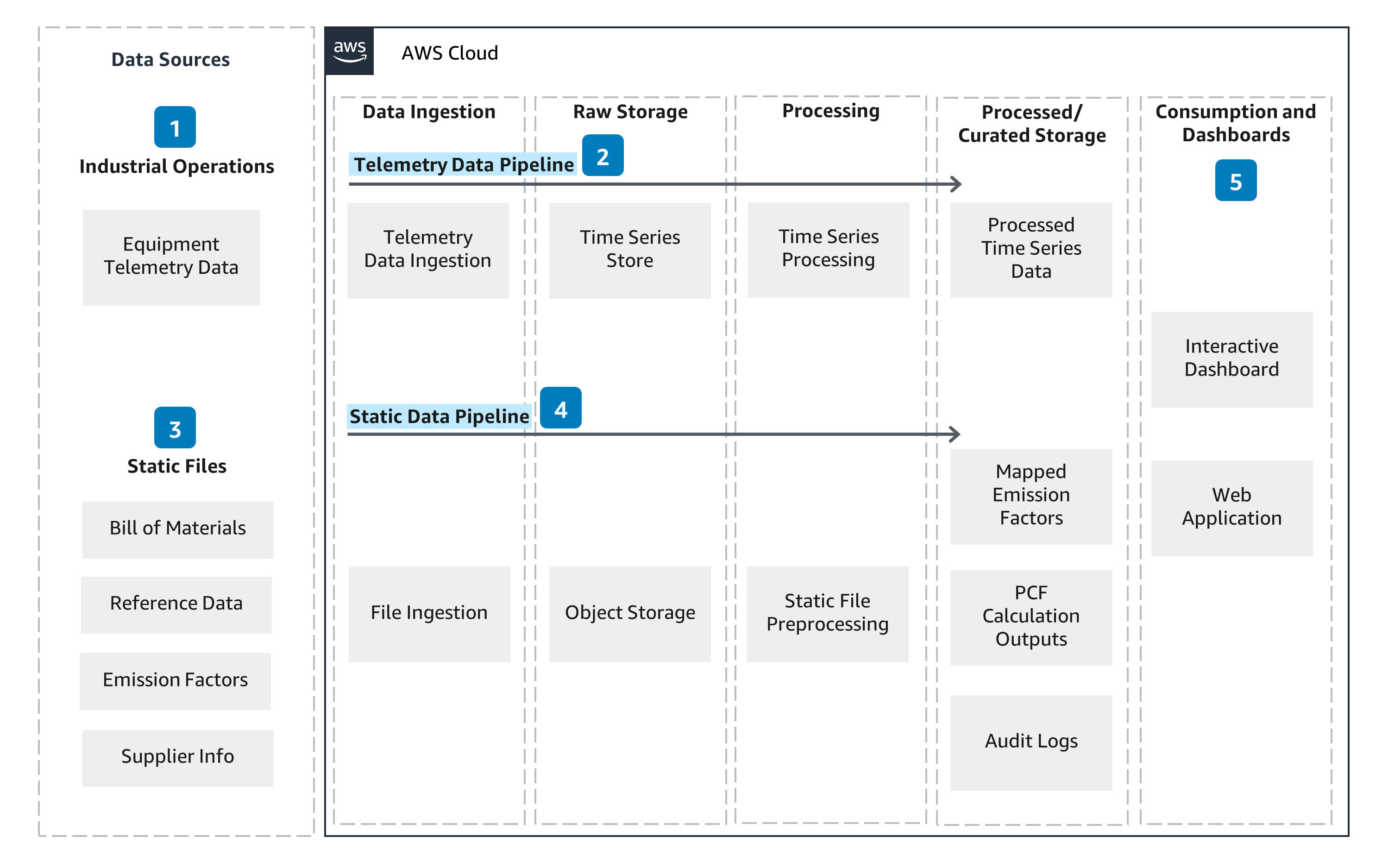

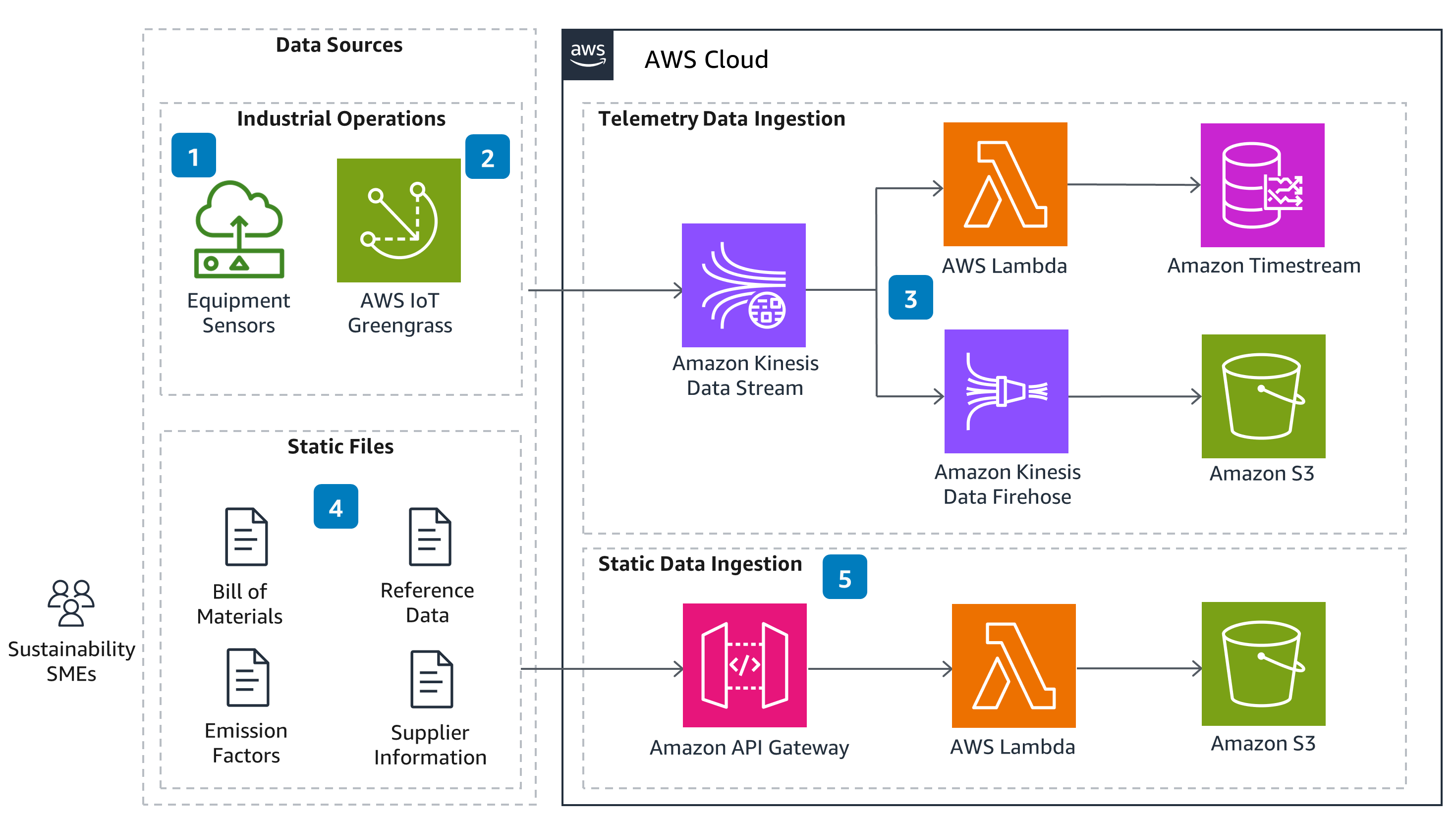

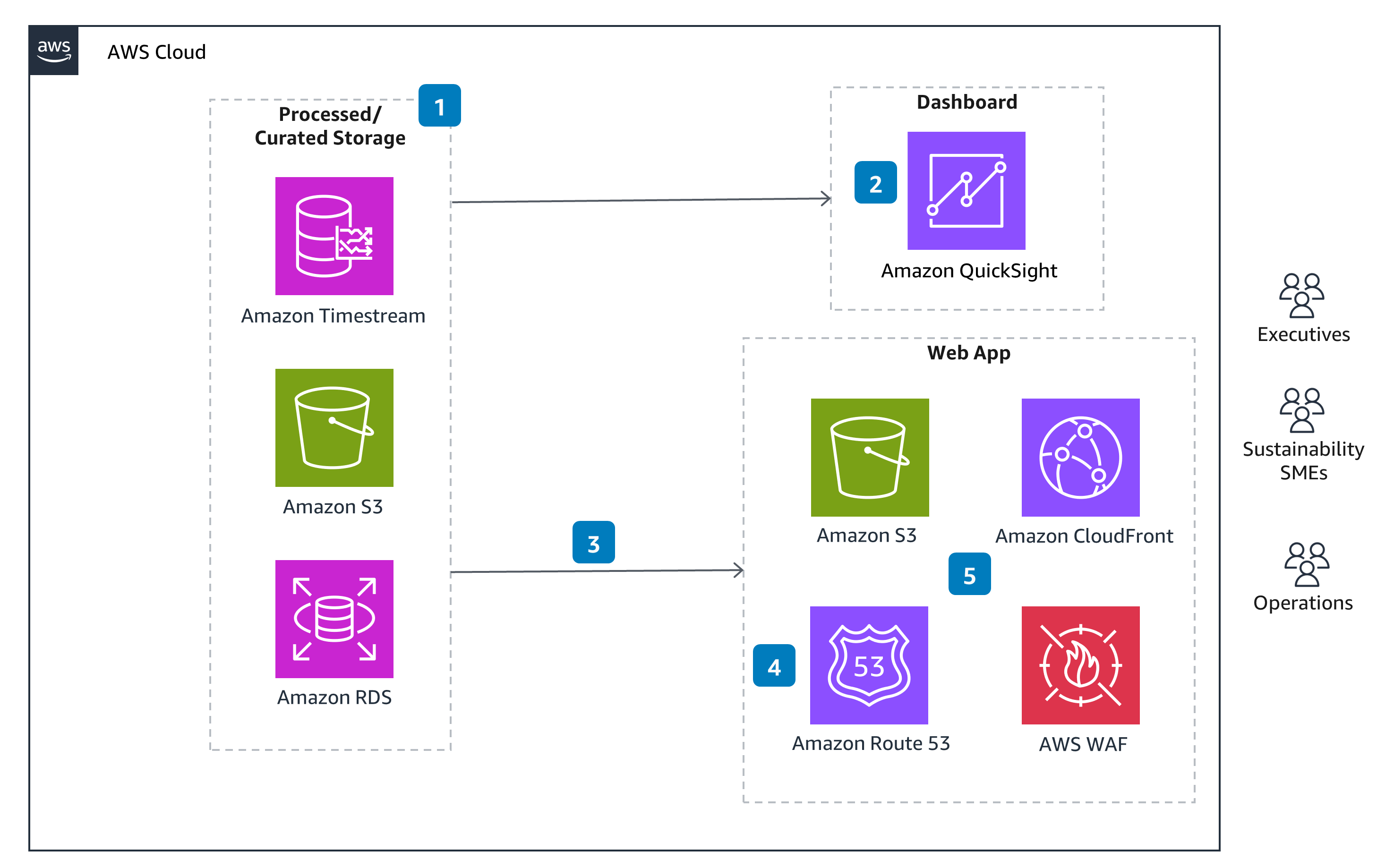

This Guidance helps customers scale product carbon footprint (PCF) tracking, reduce the manual effort involved with data collection and calculation, and provide transparent and auditable PCFs for reporting. The architecture pairs Internet of Things (IoT) sensor data from a manufacturing facility with product information and emission factors. An interactive dashboard uses this data to track product-level energy and carbon footprint in addition to benchmarking environmental performance across equipment and sites. With this Guidance, customers can identify hotspots and best practices to lower their PCF and manufacturing costs.
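The pairing of metered energy with product information and an emission factor can be sketched as follows. This is a minimal illustration of the arithmetic only, not the Guidance's actual implementation: the `EnergyReading` type, the function names, and the emission factor value are all hypothetical, and the sketch covers only electricity-related emissions.

```python
from dataclasses import dataclass


@dataclass
class EnergyReading:
    """One aggregated IoT meter reading (hypothetical shape)."""
    equipment_id: str
    product_id: str
    energy_kwh: float  # energy drawn while this product was on the equipment


def footprint_per_unit(readings, units_by_product, emission_factor_kg_per_kwh):
    """Return {product_id: kg CO2e per unit produced}.

    Sums metered energy per product, converts it to emissions with a single
    emission factor, and divides by the number of units produced.
    """
    energy = {}
    for r in readings:
        energy[r.product_id] = energy.get(r.product_id, 0.0) + r.energy_kwh
    return {
        product: kwh * emission_factor_kg_per_kwh / units_by_product[product]
        for product, kwh in energy.items()
        if units_by_product.get(product, 0) > 0  # skip products with no output
    }


def hotspot(readings):
    """Return the equipment_id with the highest total metered energy."""
    totals = {}
    for r in readings:
        totals[r.equipment_id] = totals.get(r.equipment_id, 0.0) + r.energy_kwh
    return max(totals, key=totals.get)


if __name__ == "__main__":
    readings = [
        EnergyReading("press-1", "widget", 10.0),
        EnergyReading("oven-1", "widget", 30.0),
        EnergyReading("press-1", "gadget", 5.0),
    ]
    # 0.4 kg CO2e/kWh is an illustrative placeholder, not a published factor.
    print(footprint_per_unit(readings, {"widget": 100, "gadget": 50}, 0.4))
    print(hotspot(readings))
```

In practice the emission factor would vary by site, energy source, and time period, so a single constant is a simplification.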

Please note: This solution by itself will not make a customer compliant with any product carbon footprint frameworks, standards, or regulations. It provides the foundational infrastructure from which additional complementary solutions can be integrated.

How it works

Overview

Please note: This is an overview architecture. For diagrams highlighting different aspects of this architecture, open the other tabs.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

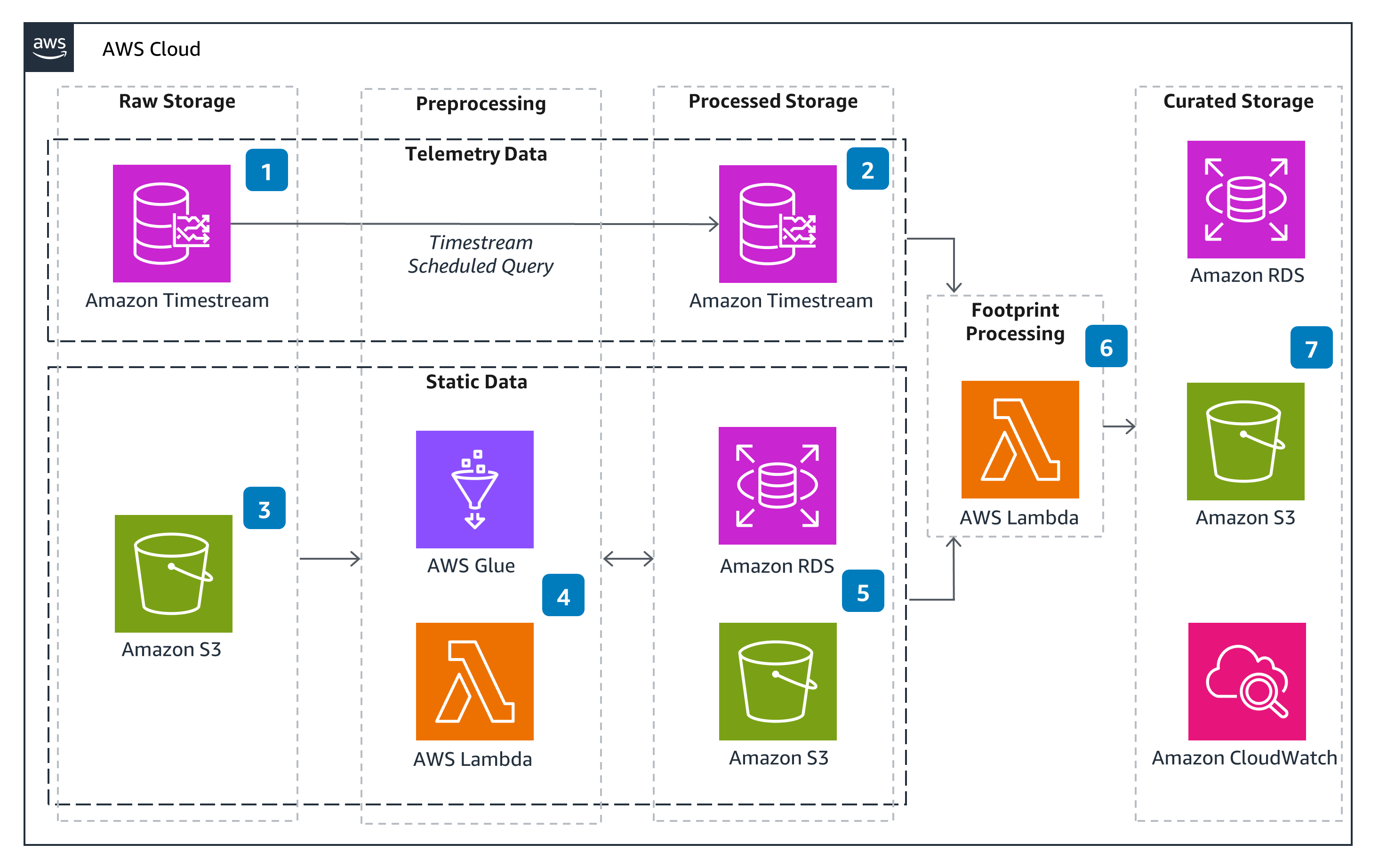

Operational Excellence

Amazon CloudWatch provides centralized logging, metrics, and alarms across all deployed services. The metrics and alarms can raise alerts when operational anomalies occur.
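As one way to raise such an alert, the sketch below builds the parameters for a CloudWatch alarm on Lambda function errors. The function name, SNS topic ARN, and threshold are assumptions for illustration; the Guidance does not specify which alarms it deploys. The boto3 call is isolated in `create_alarm` so the parameter builder runs without the AWS SDK or credentials.

```python
def lambda_error_alarm(function_name, sns_topic_arn, threshold=1):
    """Build put_metric_alarm parameters for a Lambda error alarm."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,  # seconds per evaluation window
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no invocations is not an error
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(params):
    """Create or update the alarm; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("cloudwatch").put_metric_alarm(**params)


if __name__ == "__main__":
    # Hypothetical function name and topic ARN.
    print(lambda_error_alarm(
        "pcf-ingest",
        "arn:aws:sns:us-east-1:123456789012:pcf-alerts",
    ))
```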

Security

Resources are protected using AWS Identity and Access Management (IAM) policies and principals. Grant permissions to operators through least-privilege, role-based access. AWS Key Management Service (AWS KMS) encrypts data at rest. HTTPS endpoints with Transport Layer Security (TLS) encrypt data in transit, including service endpoints and API Gateway endpoints.
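A least-privilege policy for a single role might look like the sketch below, which builds an IAM policy document for a hypothetical ingestion role that only writes sensor readings to one Timestream table and reads emission factors from one S3 prefix. The role's purpose, resource names, and account ID are assumptions; the actual permissions depend on how the Guidance is deployed.

```python
import json


def ingest_role_policy(region, account_id, database, table, bucket, prefix):
    """Return an IAM policy document scoped to one table and one S3 prefix."""
    table_arn = (
        f"arn:aws:timestream:{region}:{account_id}:"
        f"database/{database}/table/{table}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteSensorReadings",
                "Effect": "Allow",
                "Action": ["timestream:WriteRecords"],
                "Resource": table_arn,
            },
            {
                # Endpoint discovery does not support resource-level scoping.
                "Sid": "DiscoverTimestreamEndpoint",
                "Effect": "Allow",
                "Action": ["timestream:DescribeEndpoints"],
                "Resource": "*",
            },
            {
                "Sid": "ReadEmissionFactors",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # All identifiers below are placeholders.
    policy = ingest_role_policy(
        "us-east-1", "123456789012", "pcf", "sensor_readings",
        "pcf-reference-data", "emission-factors/",
    )
    print(json.dumps(policy, indent=2))
```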

Reliability

This Guidance uses serverless services wherever possible, such as API Gateway, Lambda, and Amazon Timestream, so that capacity scales automatically with fluctuating demand. It also uses AWS services such as Amazon S3, Amazon RDS, and Timestream, which provide built-in functionality for data backup and recovery.

Performance Efficiency

This Guidance uses serverless managed services, such as Lambda, that scale automatically in response to changing demand, which reduces resource overhead. Customers can also apply different analytics tools to the data stored in Amazon S3, depending on their needs.

Cost Optimization

This Guidance relies on serverless and fully managed services, such as Lambda, Amazon S3, and Timestream, which scale automatically with workload demand. As a result, you pay only for the resources you use.

Sustainability

Amazon S3 lifecycle policies can automatically move data to more energy-efficient storage classes, enforce deletion timelines, and minimize overall storage requirements. Timestream can automatically move data from the memory tier to the magnetic tier to minimize cost. This Guidance also uses managed, serverless technologies such as AWS Glue, Lambda, and Timestream to help ensure that hardware is minimally provisioned to meet demand.
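The lifecycle and tiering behavior described here can be expressed as configuration. The sketch below builds an S3 lifecycle configuration and Timestream retention properties as plain dictionaries. The prefix, day counts, storage class, and retention periods are illustrative assumptions, not values prescribed by the Guidance. The boto3 calls are isolated in `apply_settings` so the builders run without the AWS SDK.

```python
def lifecycle_configuration(prefix, transition_after_days, delete_after_days,
                            storage_class="STANDARD_IA"):
    """Build an S3 lifecycle configuration with one transition and one expiry."""
    if delete_after_days <= transition_after_days:
        raise ValueError("deletion must come after the storage class transition")
    return {
        "Rules": [
            {
                "ID": f"tier-and-expire-{prefix.strip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": transition_after_days, "StorageClass": storage_class}
                ],
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


def timestream_retention(memory_hours, magnetic_days):
    """Build Timestream retention properties (memory tier, then magnetic tier)."""
    return {
        "MemoryStoreRetentionPeriodInHours": memory_hours,
        "MagneticStoreRetentionPeriodInDays": magnetic_days,
    }


def apply_settings(bucket, database, table, lifecycle, retention):
    """Apply both settings; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders above have no dependencies

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=lifecycle
    )
    boto3.client("timestream-write").update_table(
        DatabaseName=database, TableName=table, RetentionProperties=retention
    )


if __name__ == "__main__":
    # Placeholder values: raw sensor exports tiered after 30 days, deleted after 365.
    print(lifecycle_configuration("raw-sensor-data/", 30, 365))
    print(timestream_retention(memory_hours=24, magnetic_days=365))
```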
