Guidance for Near Real-Time Fraud Detection with Graph Neural Network on AWS

Overview

This Guidance demonstrates an end-to-end, near real-time anti-fraud system based on deep learning graph neural networks. This blueprint architecture uses Deep Graph Library (DGL) to construct a heterogeneous graph from tabular data and train a Graph Neural Network (GNN) model to detect fraudulent transactions.
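As a rough illustration of how tabular data becomes a heterogeneous graph, the sketch below turns transaction rows into per-relation edge lists with integer node IDs. The column names (`transaction_id`, `card_id`, `device_id`), the sample rows, and the helper `build_hetero_edges` are assumptions made for this example and are not taken from the Guidance. The resulting dictionary, keyed by `(source type, relation, destination type)`, is intended to match the shape that DGL's `dgl.heterograph` accepts, but the sketch itself uses only the standard library.

```python
from collections import defaultdict

# Hypothetical sample rows; the column names are assumptions for illustration.
TRANSACTIONS = [
    {"transaction_id": "t1", "card_id": "c1", "device_id": "d1"},
    {"transaction_id": "t2", "card_id": "c1", "device_id": "d2"},
    {"transaction_id": "t3", "card_id": "c2", "device_id": "d2"},
]


def build_hetero_edges(rows, target_col, entity_cols):
    """Turn tabular rows into per-relation edge lists with integer node IDs."""
    node_ids = defaultdict(dict)  # node type -> {raw value: integer ID}
    edges = {}  # (src type, relation, dst type) -> (src IDs, dst IDs)

    def node_id(node_type, raw_value):
        mapping = node_ids[node_type]
        # Assign the next free integer the first time a value is seen.
        return mapping.setdefault(raw_value, len(mapping))

    for col in entity_cols:
        src, dst = [], []
        for row in rows:
            src.append(node_id(target_col, row[target_col]))
            dst.append(node_id(col, row[col]))
        edges[(target_col, f"{target_col}<>{col}", col)] = (src, dst)
        # Add the reverse relation so information can flow in both directions.
        edges[(col, f"{col}<>{target_col}", target_col)] = (dst, src)
    return dict(node_ids), edges


node_ids, edges = build_hetero_edges(
    TRANSACTIONS, "transaction_id", ["card_id", "device_id"]
)
```

Transactions that share a card or a device end up connected through that shared node, which is the structure a GNN can exploit when scoring a transaction.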

How it works

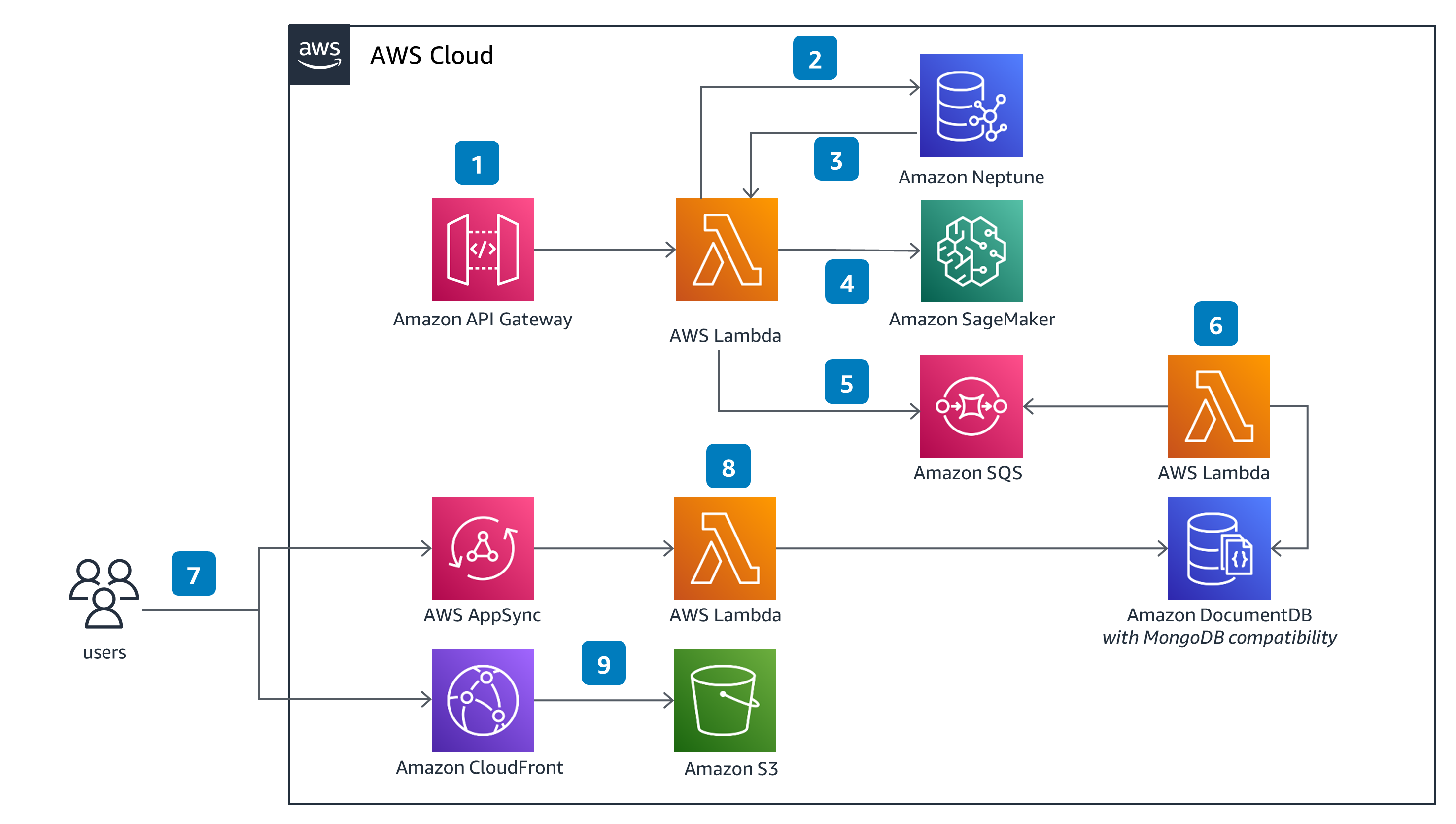

Near Real-Time Fraud Detection

These technical details feature an architecture diagram to illustrate how to effectively use this solution. The architecture diagram shows the key components and their interactions, providing an overview of the architecture's structure and functionality step-by-step.

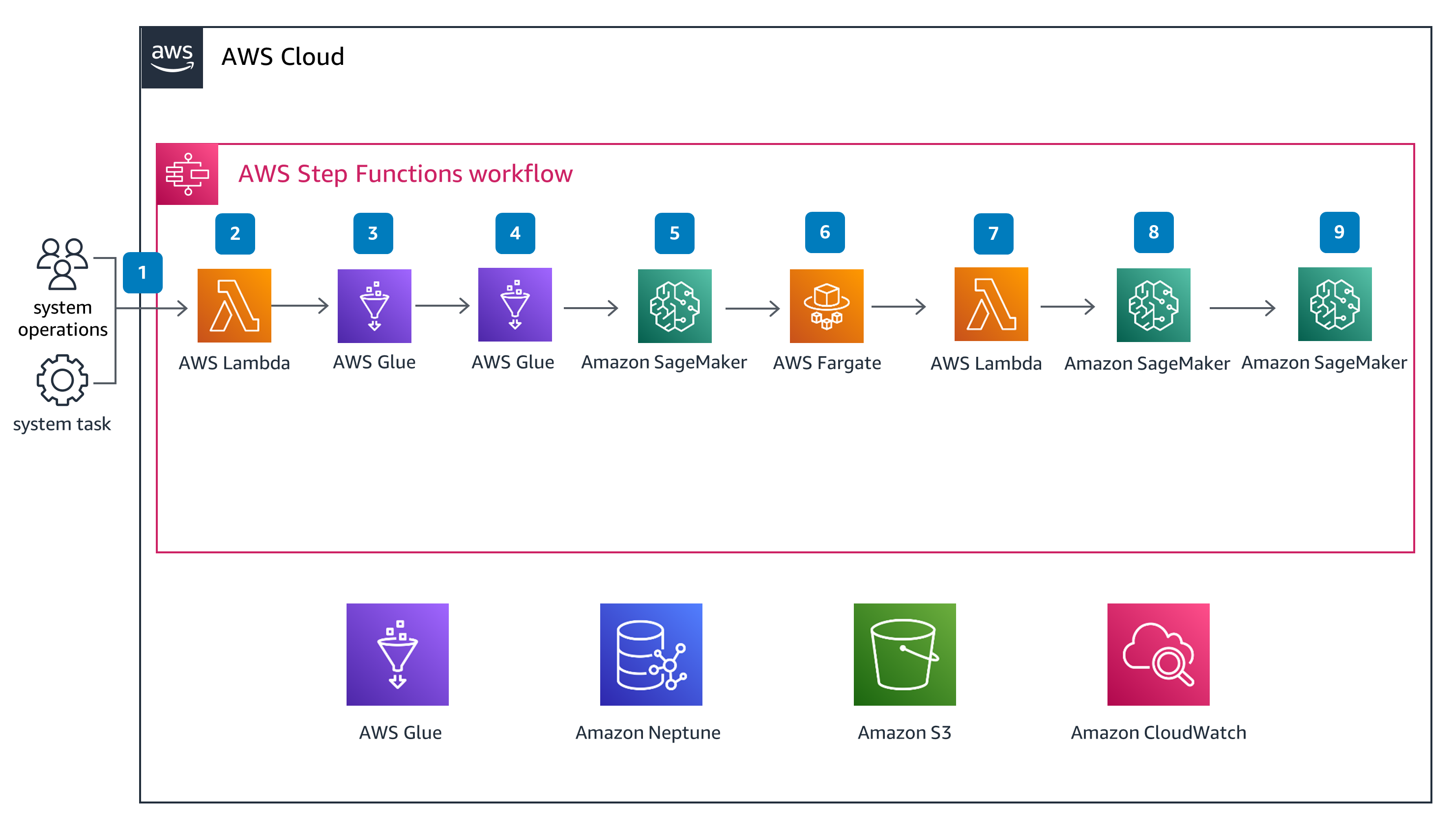

Offline Model Training

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

This Guidance uses serverless AWS services such as AWS Glue, SageMaker, AWS Fargate, and Lambda as compute resources for processing data, training models, and serving the API, which keeps billing on pay-as-you-go pricing. One of the data stores is built on Amazon S3, providing a low total cost of ownership for storing and retrieving data. The business dashboard uses CloudFront, Amazon S3, AWS AppSync, and Lambda to implement the web application.

API Gateway and Lambda provide a protection layer when invoking Lambda functions through an outbound API. All the proposed services support integration with AWS Identity and Access Management (IAM), which can be used to control access to resources and data. All traffic between services in the VPC is controlled by security groups.

API Gateway, Lambda, AWS Step Functions, AWS Glue, Amazon S3, Neptune, Amazon DocumentDB, and AWS AppSync provide high availability within a Region. Customers can deploy SageMaker endpoints in a highly available manner.
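One common way to make a SageMaker endpoint more resilient is to run more than one instance behind it, on the understanding that SageMaker tries to spread multiple instances across Availability Zones. The sketch below only builds the request arguments; the helper name `build_endpoint_config`, the default instance type, and the naming scheme are assumptions for illustration and are not taken from the Guidance. The dictionary keys follow the parameters of the SageMaker `CreateEndpointConfig` API.

```python
def build_endpoint_config(model_name, instance_type="ml.c5.large", instance_count=2):
    """Build CreateEndpointConfig arguments with at least two instances."""
    if instance_count < 2:
        # A single instance leaves the endpoint exposed to one instance failure.
        raise ValueError("use at least two instances for a highly available endpoint")
    return {
        "EndpointConfigName": f"{model_name}-config",  # hypothetical naming scheme
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "InstanceType": instance_type,  # assumed default, adjust as needed
                "InitialInstanceCount": instance_count,
            }
        ],
    }


config = build_endpoint_config("fraud-gnn-model")
```

The resulting dictionary could be passed as keyword arguments to the `create_endpoint_config` call of a boto3 SageMaker client.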

All the services used in the design provide Amazon CloudWatch metrics that can be used to monitor individual components of the design. MLOps pipelines orchestrated by Step Functions help to continuously iterate on the model. API Gateway and Lambda allow publishing of new versions through an automated pipeline.
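To make the idea of a Step Functions pipeline more concrete, the following is a minimal sketch of a two-step state machine definition in Amazon States Language, expressed as a Python dictionary. It assumes a pipeline that runs one AWS Glue job and then one SageMaker training job; the state names, the helper `build_training_pipeline`, and the job name are hypothetical, and the actual pipeline in this Guidance may contain different or additional steps. The training job parameters are left to the caller rather than guessed here.

```python
import json


def build_training_pipeline(glue_job_name, training_job_params):
    """Return a two-step state machine definition: Glue job, then training job."""
    return {
        "Comment": "Illustrative sketch: process data, then train the model",
        "StartAt": "ProcessData",
        "States": {
            "ProcessData": {
                "Type": "Task",
                # The .sync suffix makes the state wait for the Glue job to finish.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                "Next": "TrainModel",
            },
            "TrainModel": {
                "Type": "Task",
                # Waits for the SageMaker training job to complete.
                "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
                "Parameters": training_job_params,  # supplied by the caller
                "End": True,
            },
        },
    }


definition = build_training_pipeline("example-glue-job", {})
definition_json = json.dumps(definition, indent=2)
```

Keeping the definition as data makes it easy to version alongside the model code, so each iteration of the model can reuse or adjust the same pipeline.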

This Guidance requires GNN model training for fraud detection. The performance requirements for batch processing range from minutes to hours; AWS Glue and SageMaker training jobs are designed to meet them. Neptune is a purpose-built, high-performance graph database engine. Neptune efficiently stores and navigates graph data, and uses a scale-up, in-memory optimized architecture for fast query evaluation over large graphs. Provisioned concurrency in Lambda and the HTTP API in API Gateway can support a latency requirement of less than 10 ms.

This Guidance uses the scaling behaviors of Lambda and API Gateway to reduce over-provisioning of resources. It uses AWS managed services to maximize resource utilization and to reduce the amount of energy needed to run a given workload.